This is the story of my home server: how it came to be, how it evolved and what the plans for the future are. It does what a typical home lab/home server does these days: network attached storage (NAS), media streaming, virtualization, web server, gaming server, home automation... Even this blog runs on it.

How it all began

The origin story of the home server is a typical one. The home desktop PC got upgraded a couple of years ago and the old one got re-used. It was actually my son's idea and I went along with it. He got tired of running a Minecraft server on the desktop and suggested that, when the time came to upgrade the PC, we turn the old one into a dedicated Minecraft server, one that he could leave on overnight. Long story short, we had some hardware for the server:

- MSI MS-7788 motherboard

- Intel i5 2400 CPU

- 8GB of RAM

- 1TB WD SATA hard disk

- NVIDIA GeForce GT 620 (the "production" GPU, a 1050 Ti based card, went into the new desktop until a suitable newer one became available; this was all happening during the big GPU shortage)

The system has no use for the GPU except to provide an HDMI output for a monitor that has neither DVI nor VGA inputs (the only outputs available on the motherboard). The case already had some additional fans in the front, making it perfect for 24x7 operation. Bear in mind that we are talking about hardware from 10-12 years ago. Front fans weren't a thing then ;-)

Initial install

We have the hardware, let's go and install the software. The Minecraft server was going to be my son's pet project: he was going to install and maintain it, and Ubuntu Linux was the OS of choice. On the other hand, as soon as this hardware became available, I started having ideas of my own. The old Raspberry Pi 3 with OSMC and an external WD Elements drive wasn't cutting it any more as a media player, a NAS and a remote access device at the same time. It was struggling as a media player alone.

Although we could easily have shared a single OS installation, it was going to be a mess, so we decided to install a virtualization hypervisor first: a Type 1 (bare-metal) hypervisor. As I had previous work experience with VMware's ESXi, we went with it. Additionally, I have experienced VMware certified engineers at work, should I find myself in a pickle and need assistance. From today's point of view, I should've at least tried Proxmox.

After a couple of hours of registering, obtaining the free license and building installation media that supports the onboard RTL8111 NIC, we got ESXi up and running. We installed it on a USB flash drive, leaving the whole 1TB drive for the datastore. After a crash course in ESXi basics, my son got the Minecraft server he had always wanted and I got myself a playground ;-)

Not long after, the WD Elements USB drive from the RPi got donated to the "server", making it a second local datastore. The idea was to run a file-sharing VM serving content to the home network. Initially, it was supposed to be just another Ubuntu VM with Samba and NFS, but very soon it became a quest for a NAS system that can run as a VM. FreeNAS/TrueNAS, Openmediavault, Unraid and Xpenology were the most popular ones. Unraid got discarded right from the start for not being free. TrueNAS's minimum hardware requirements were just too much. Xpenology looked interesting, but it seemed that its full potential could be unleashed only with a bunch of dedicated physical drives and passthrough, which I didn't have (yet).

OMV it is :-)

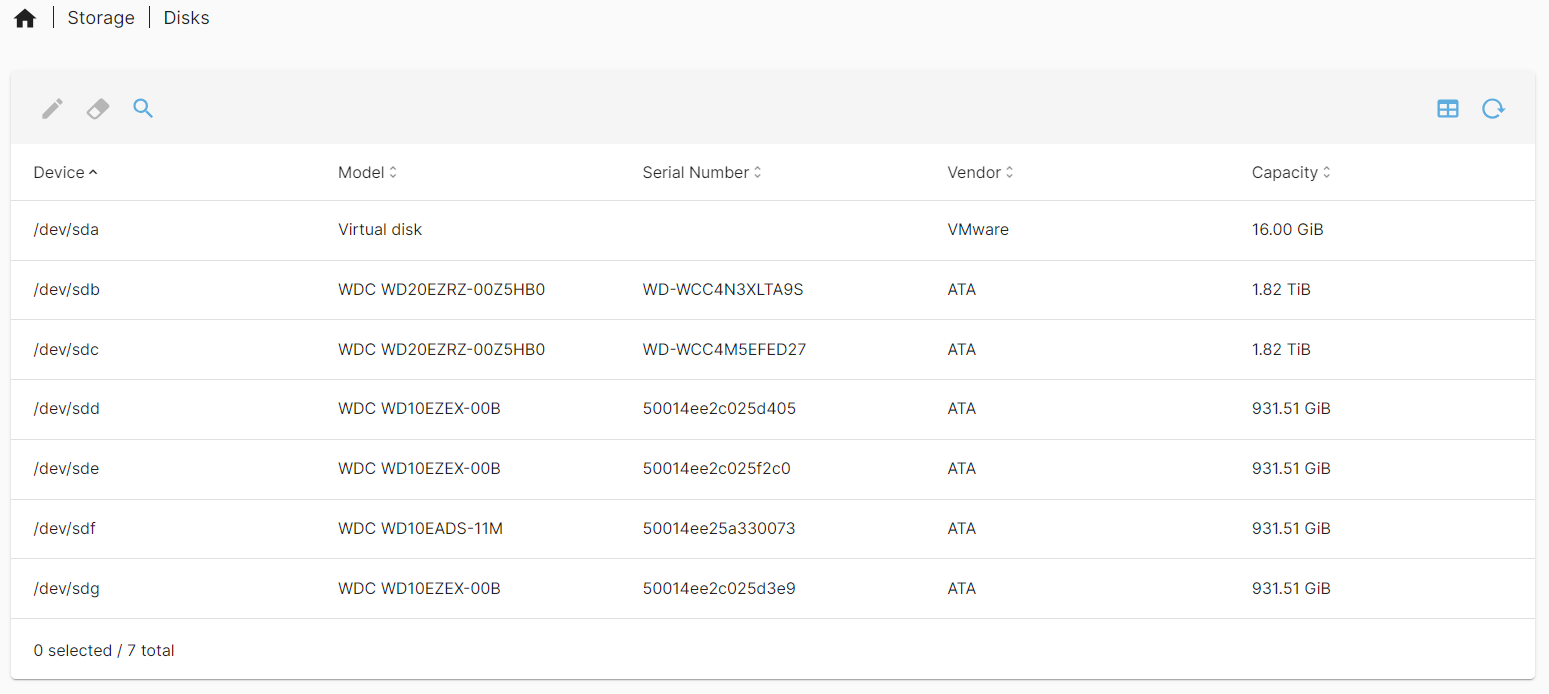

We got Openmediavault up and running, created a couple of virtual disks on both physical datastores and tried out various combinations of mdadm RAID arrays. The system was easy enough to use and configure, so we decided that it was here to stay. Not long after, I stumbled upon a great deal for a couple of 2TB WD Blues and some DDR3 RAM sticks. The homeserver got its first official upgrade. We now have:

- 16GB RAM (dual channel)

- 2x 1TB SATA HDD

- 2x 2TB SATA HDD

I went for the following HDD setup:

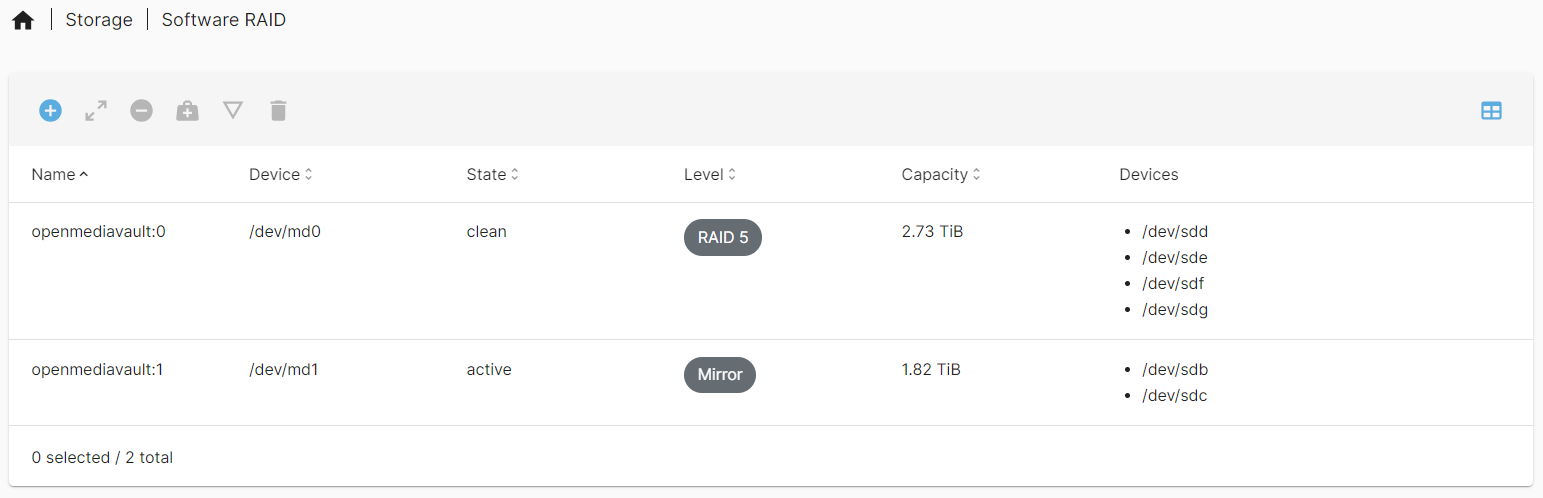

- Pass the 2TB disks through to the OMV virtual machine as RDM (raw device mapping) disks and create a RAID1 mirrored array. This was for the important stuff: data needing protection.

- Create 2x 500GB vmdk virtual disks, each one on a different physical datastore, and make a RAID0 striped array of them in OMV. Not protected. For the casual stuff.

- 2x 500GB of datastore space (minus the active machines) for VM storage.
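To put numbers on the layout above, here is a small sh helper that computes the usable capacity of an mdadm array built from equal-sized members. `raid_usable_gb` is a hypothetical illustration, not something OMV ships, and it ignores mdadm metadata and filesystem overhead, so real numbers come out slightly lower.

```sh
#!/bin/sh
# raid_usable_gb LEVEL MEMBERS SIZE_GB
# Usable capacity of an mdadm array made of MEMBERS equal disks of SIZE_GB each.
# mdadm metadata and filesystem overhead are ignored.
raid_usable_gb() {
    level=$1
    members=$2
    size=$3
    case $level in
        0) echo $((members * size)) ;;        # striping: every byte is usable
        1) echo "$size" ;;                     # mirroring: one member's worth
        5) echo $(((members - 1) * size)) ;;   # one member's worth goes to parity
        *) echo "unsupported RAID level: $level" >&2; return 1 ;;
    esac
}

echo "RAID1, 2x 2000GB: $(raid_usable_gb 1 2 2000)GB"
echo "RAID0, 2x 500GB:  $(raid_usable_gb 0 2 500)GB"
```

The same helper gives 2000GB for three 1TB disks in RAID5 and 3000GB for four, since RAID5 always spends one member's worth of space on parity.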

As I wanted to provide file sharing to the other VMs as well as to the rest of the network, I created a dedicated server-to-server network within ESXi for file sharing, with jumbo frames and everything.
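Such an internal network can be built as a standard vSwitch without any physical uplinks, so its traffic never leaves the host. The sketch below shows the esxcli calls for it; the same can be clicked together in the ESXi host client. `vSwitchNAS` and `NAS-internal` are hypothetical names, and to stay harmless if run by accident the sketch only prints the commands unless `DRY_RUN=0` is set.

```sh
#!/bin/sh
# Sketch: an internal standard vSwitch (no physical uplinks, so traffic never
# leaves the host) with 9000-byte jumbo frames, plus a port group for VM vNICs.
# vSwitchNAS and NAS-internal are hypothetical names.
# Commands are only printed unless DRY_RUN=0 is set in the ESXi shell.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" != 0 ]; then echo "$*"; else "$@"; fi
}

VSWITCH=vSwitchNAS
PORTGROUP=NAS-internal

run esxcli network vswitch standard add --vswitch-name="$VSWITCH"
run esxcli network vswitch standard set --vswitch-name="$VSWITCH" --mtu=9000
run esxcli network vswitch standard portgroup add \
    --portgroup-name="$PORTGROUP" --vswitch-name="$VSWITCH"
```

The OMV VM and the client VMs then each get a vNIC in that port group. The MTU inside each guest has to be raised to 9000 as well, otherwise the guests keep sending standard 1500-byte frames.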

And for quite some time, this was the setup of my lab. The virtual machines (all Linux) would have virtual disks on both datastores, with mdadm RAID1 within the OS for protection in case of a disk failure. Powering the VMs up after a disk failure would still require some reconfiguration, but the data would still be there. During this period, the homeserver became home to various services: the Minecraft server, OMV NAS, Home Assistant, the GNS3 network simulator, a hostapd based WiFi access point (with a passed-through USB WiFi card), a DLNA media server (eventually scrapped in favor of a Jellyfin instance with clients on our devices, including Kodi), Zabbix monitoring of the whole "infrastructure"... There was a plan for local DNS and DHCP servers, but I didn't feel comfortable enough with the robustness of the system: in case of a failure, none of us could easily get on the network with our devices. More on this later...
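For reference, an in-guest mirror like that can be set up on an Ubuntu VM roughly as follows. The device names `/dev/sdb` and `/dev/sdc` are assumptions (check with `lsblk` first), and the sketch only prints the commands unless `DRY_RUN=0` is set, since the real ones need root and destroy whatever is on the disks.

```sh
#!/bin/sh
# Sketch: in-guest RAID1 across two vmdks that live on different physical
# datastores. /dev/sdb and /dev/sdc are assumed to be the two empty data
# disks; the mdadm.conf path and update-initramfs are Ubuntu specifics.
# Commands are only printed unless DRY_RUN=0 is set (running them needs root).
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" != 0 ]; then echo "$*"; else "$@"; fi
}

DISK_A=/dev/sdb   # vmdk on datastore 1 (assumption)
DISK_B=/dev/sdc   # vmdk on datastore 2 (assumption)

run mdadm --create /dev/md0 --level=1 --raid-devices=2 "$DISK_A" "$DISK_B"
run mkfs.ext4 /dev/md0
# Persist the array definition so it is assembled as /dev/md0 at boot
run sh -c 'mdadm --detail --scan >> /etc/mdadm/mdadm.conf'
run update-initramfs -u
```

If one datastore dies, the array keeps running degraded on the surviving vmdk until a replacement disk is added back with `mdadm --add`.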

The twist

As time went by, the server got a couple more 1TB disks and a plan for the future. Since the motherboard has only 4 SATA ports, it got an HBA too: an el-cheapo Chinese ASM1062 based 2-port SATA controller from AliExpress. As the HBA wasn't supported by ESXi, I ended up passing the whole PCI card through to OMV instead of just the disks, and this worked just fine. The whole setup was OK and functional, but there was always one thing that bothered me: why couldn't I use the RAID protection of the NAS for my virtual machines? Having to configure mdadm RAID on each new VM is a bit of a pain. And this is where the twist comes into play...

Usually, when you have an all-in-one homeserver with NAS and virtualization, it is the NAS that gets installed directly on the hardware, takes care of RAID and data protection, and provides virtualization services (usually through KVM) on top. In my case, since I already had ESXi running on the machine and the NAS was already a virtual machine, I decided to try another approach: run a networked storage service on top of the RAID1 array, present it to the ESXi host internally (through the server-to-server internal network) and put a datastore, RAID protected by OMV, on top of that. Why wouldn't it work? All it needed was another vmk (VMkernel) interface and a networked storage protocol. It was either NFS or iSCSI, and OMV supported both. Both worked, but iSCSI proved to be a bit of a pain to configure and maintain, especially since I hadn't bothered to put the arrays under LVM so that I could carve up logical volumes for different purposes (e.g. iSCSI targets). Since performance was more or less the same, I decided to go with NFS.
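On the ESXi side, the extra vmk interface goes into the internal port group, and a vmkping with fragmentation disabled is a quick way to confirm that jumbo frames really work end to end. In this sketch `vmk1`, the port group name `NAS-internal` and the host address 10.0.0.1/24 are assumptions; 10.0.0.10 is the OMV address the startup script mounts its datastores from. The commands are only printed unless `DRY_RUN=0` is set.

```sh
#!/bin/sh
# Sketch: VMkernel interface on the internal port group, so the ESXi host
# itself can reach OMV's NFS exports, plus a jumbo-frame check.
# vmk1, NAS-internal and 10.0.0.1/24 are hypothetical; 10.0.0.10 is the OMV
# address used by the startup script. Commands are only printed unless DRY_RUN=0.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" != 0 ]; then echo "$*"; else "$@"; fi
}

# Largest ICMP echo payload that fits in one unfragmented IPv4 packet:
# the MTU minus the 20-byte IPv4 header and the 8-byte ICMP header.
icmp_payload() {
    echo $(($1 - 28))
}

run esxcli network ip interface add --interface-name=vmk1 \
    --portgroup-name=NAS-internal --mtu=9000
run esxcli network ip interface ipv4 set --interface-name=vmk1 \
    --type=static --ipv4=10.0.0.1 --netmask=255.255.255.0
# -d forbids fragmentation, so this only succeeds if 9000-byte frames pass end to end
run vmkping -I vmk1 -d -s "$(icmp_payload 9000)" 10.0.0.10
```

With a 9000-byte MTU the largest payload is 8972 bytes; if that ping fails while a default-sized one works, some hop is still at 1500.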

And it worked. The only practical problem arises when the server restarts: the OMV VM needs to start first, the NFS datastores need to be mounted, and only then do the other machines become available to be started. Shell scripting to the rescue. ESXi runs a script during startup (/etc/rc.local.d/local.sh) that is executed before the system goes through the VM autostart list. If only one could power up OMV from there, wait until the datastores are available, mount them and continue with the startup... As it happens... one could ;-)

The initial concept was borrowed from this post.

logger "local.sh: Powering up OpenMediaVault VM..."

vim-cmd vmsvc/power.on $(vim-cmd vmsvc/getallvms | grep 'OMV' | sed 's/^\([0-9]*\) .*$/\1/')

if esxcli system maintenanceMode get | grep -q 'Disabled'

then

logger "local.sh: Is OMV up?"

# We wait until vmware tools are running on the guest machine in 30 secon intervals

until vim-cmd vmsvc/get.summary $(vim-cmd vmsvc/getallvms | grep 'OMV' | sed 's/^\([0-9]*\) .*$/\1/') | grep toolsStatus | grep -q toolsOk

do

logger "local.sh: OMV is not up yet. Sleeping 30 seconds..."

sleep 30

done

# we "sleep" another 30 second for good measure. Vmware tools are not a guarantee that all the services are up...

sleep 30

logger "local.sh: OMV is up and running... Mounting NFS datastores..."

# We try to mount the datastores. If it fails, we wait for 10 second then try again

until esxcli storage nfs41 list | grep NFS_datastore | awk '{print $5}' | grep -q true

do

esxcli storage nfs41 remove -v NFS_datastore

esxcli storage nfs41 add -H 10.0.0.10 -s 2TB_RAID1/iSCSI_images -v NFS_datastore

sleep 10

done

logger "local.sh: NFS_datastore mounted"

until esxcli storage nfs41 list | grep IMAGES | awk '{print $5}' | grep -q true

do

esxcli storage nfs41 remove -v IMAGES

esxcli storage nfs41 add -H 10.0.0.10 -s 2TB_RAID5/IMAGES -v IMAGES

sleep 10

done

logger "local.sh: IMAGES mounted"

logger "local.sh: OMV is up... waiting 30 seconds for the system to stabilize..."

sleep 30

fi

logger "local.sh: Completed. Continuing boot..."

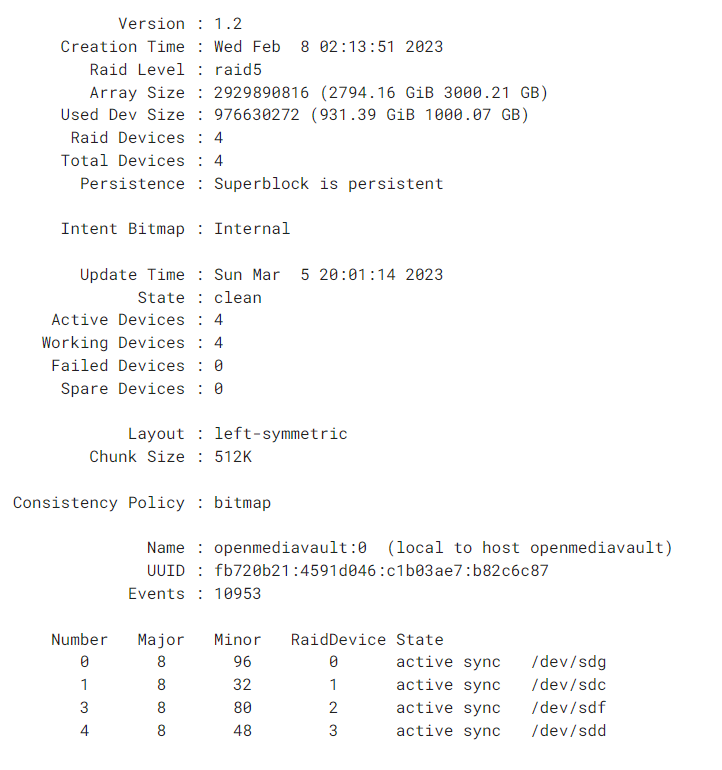

exit 0Armed with the new startup script i went on and reconfigured the whole storage. The system now has 4x 1TB and 2x 2TB disks. The 1TB ones are on the on-board SATA controller, and the 2TB ones are on the el-cheapo HBA...

- All the VMs, except OMV got migrated to the RAID1 NFS share

- Three of the four 1TB drives got passed through to OMV. A new RAID5 array was created in place of the 2x 500GB RAID0, and the data got moved back and forth during this process.

- The fourth 1TB drive was left to ESXi as a local datastore. One local datastore is required to host the OMV boot/root disk and VMX configuration file, and this drive was it.

So far, so good. Now all the VMs, not only the Linux ones, were protected from disk failures. Except OMV... :-(
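One weakness of the `local.sh` script is that its `until` loops never give up: if OMV fails to boot or an export never shows up, the host presumably stays stuck in the script and the rest of the startup never continues. A bounded retry avoids that. `retry_until`, `nfs_accessible` and `try_mount` are hypothetical helpers, and the attempt counts in the usage comment are arbitrary assumptions.

```sh
#!/bin/sh
# retry_until MAX DELAY CMD [ARGS...]
# Runs CMD up to MAX times, sleeping DELAY seconds between failed attempts.
# Returns 0 as soon as CMD succeeds, 1 if every attempt failed.
retry_until() {
    max=$1
    delay=$2
    shift 2
    attempt=1
    while ! "$@"; do
        if [ "$attempt" -ge "$max" ]; then
            return 1
        fi
        attempt=$((attempt + 1))
        sleep "$delay"
    done
    return 0
}

# Same check as in local.sh: the fifth column of "esxcli storage nfs41 list"
# reads true once the named datastore is usable.
nfs_accessible() {
    esxcli storage nfs41 list | grep "$1" | awk '{print $5}' | grep -q true
}

# One iteration of the original mount loop: succeed if VOLUME is already
# accessible, otherwise remove and re-add it, then check again.
try_mount() {
    volume=$1
    host=$2
    share=$3
    nfs_accessible "$volume" && return 0
    esxcli storage nfs41 remove -v "$volume"
    esxcli storage nfs41 add -H "$host" -s "$share" -v "$volume"
    nfs_accessible "$volume"
}

# Example use inside local.sh (at most 30 attempts, 10 seconds apart):
#   if ! retry_until 30 10 try_mount NFS_datastore 10.0.0.10 2TB_RAID1/iSCSI_images; then
#       logger "local.sh: NFS_datastore still not accessible, continuing boot anyway"
#   fi
```

The VMs on a missing datastore still won't start in that case, but the host finishes booting and stays reachable for troubleshooting.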

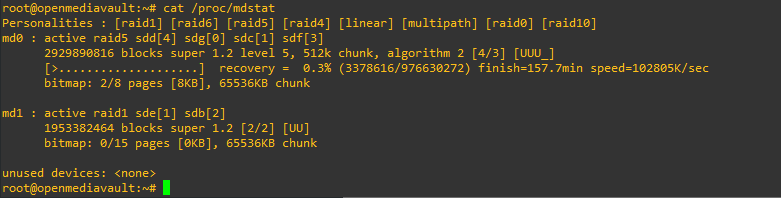

More twists

I was quite happy with the new setup, but one thing still bothered me (there were other things as well, but this one bothered me a lot): a whole 1TB disk serving as a datastore for a single 16GB vmdk for OMV. Just look at all that unused disk potential. But what could I do? I couldn't just add another disk: there were no SATA ports left, nor were there any PCI slots available for another HBA. I ended up adding a new USB flash drive as a local datastore, moved the OMV VM to it and added the fourth 1TB disk to the RAID5 array. Finally, all the hard disks were part of the NAS, protected with RAID and serving both user files and VMs.
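Adding a fourth disk to an existing RAID5 is a reshape. OMV's RAID management page has a Grow action for it; the shell equivalent is sketched below, assuming the array is `/dev/md0`, the new disk shows up as `/dev/sde` and the filesystem is ext4 directly on the array. It only prints the commands unless `DRY_RUN=0` is set.

```sh
#!/bin/sh
# Sketch: grow a 3-disk mdadm RAID5 to 4 disks from the OMV shell.
# /dev/md0, /dev/sde and an ext4 filesystem directly on the array are
# assumptions. Commands are only printed unless DRY_RUN=0 (they need root).
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" != 0 ]; then echo "$*"; else "$@"; fi
}

ARRAY=/dev/md0
NEW_DISK=/dev/sde

run mdadm --manage "$ARRAY" --add "$NEW_DISK"   # the new disk joins as a spare
run mdadm --grow "$ARRAY" --raid-devices=4      # reshape: the spare becomes a data member
run mdadm --wait "$ARRAY"                       # block until the reshape has finished
run resize2fs "$ARRAY"                          # ext4 can be grown while mounted
```

The reshape rewrites every stripe, so on 1TB disks it can take many hours; the array stays usable, if slower, in the meantime. Usable space goes from 2TB to 3TB.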

The first real test

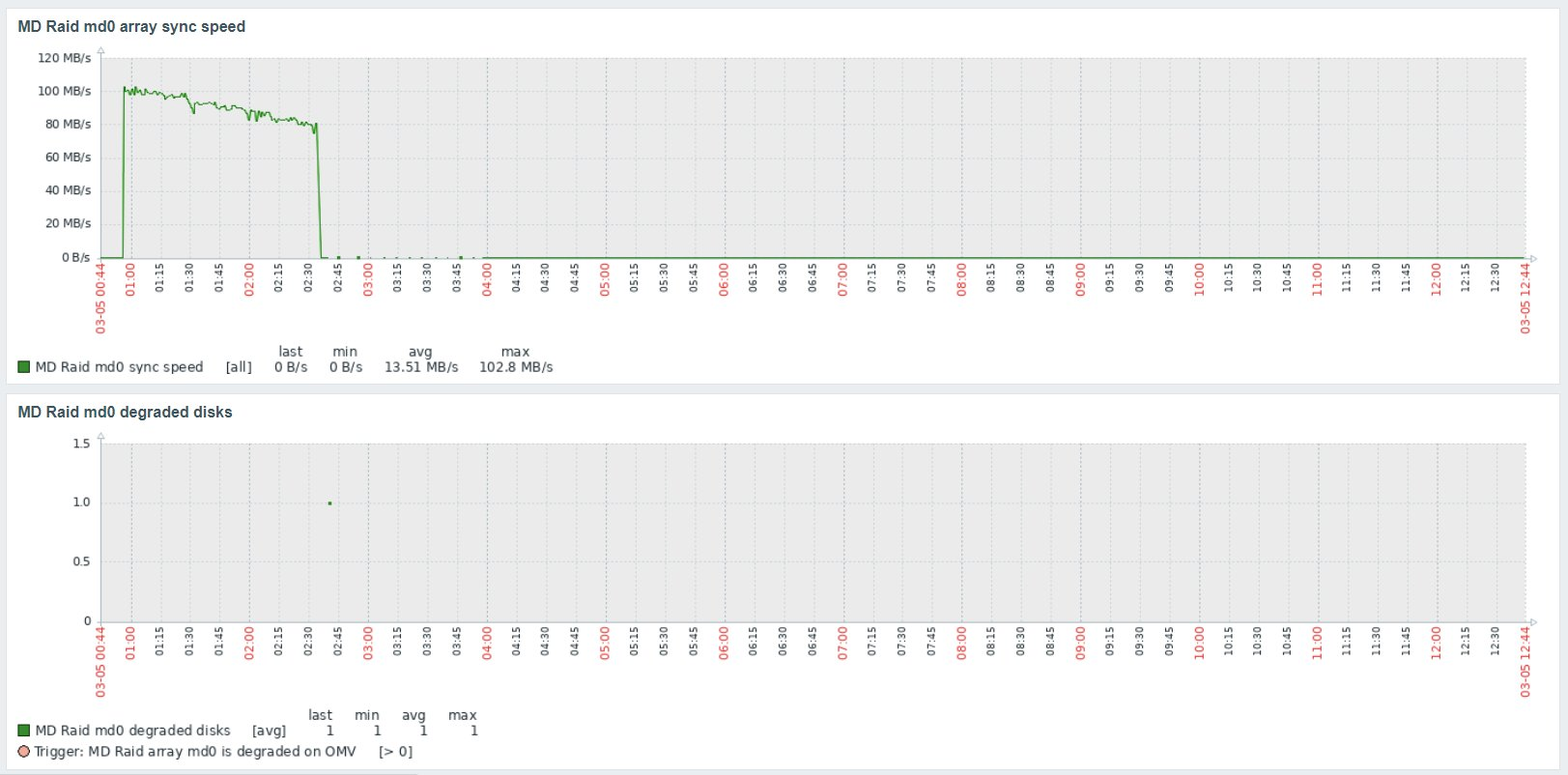

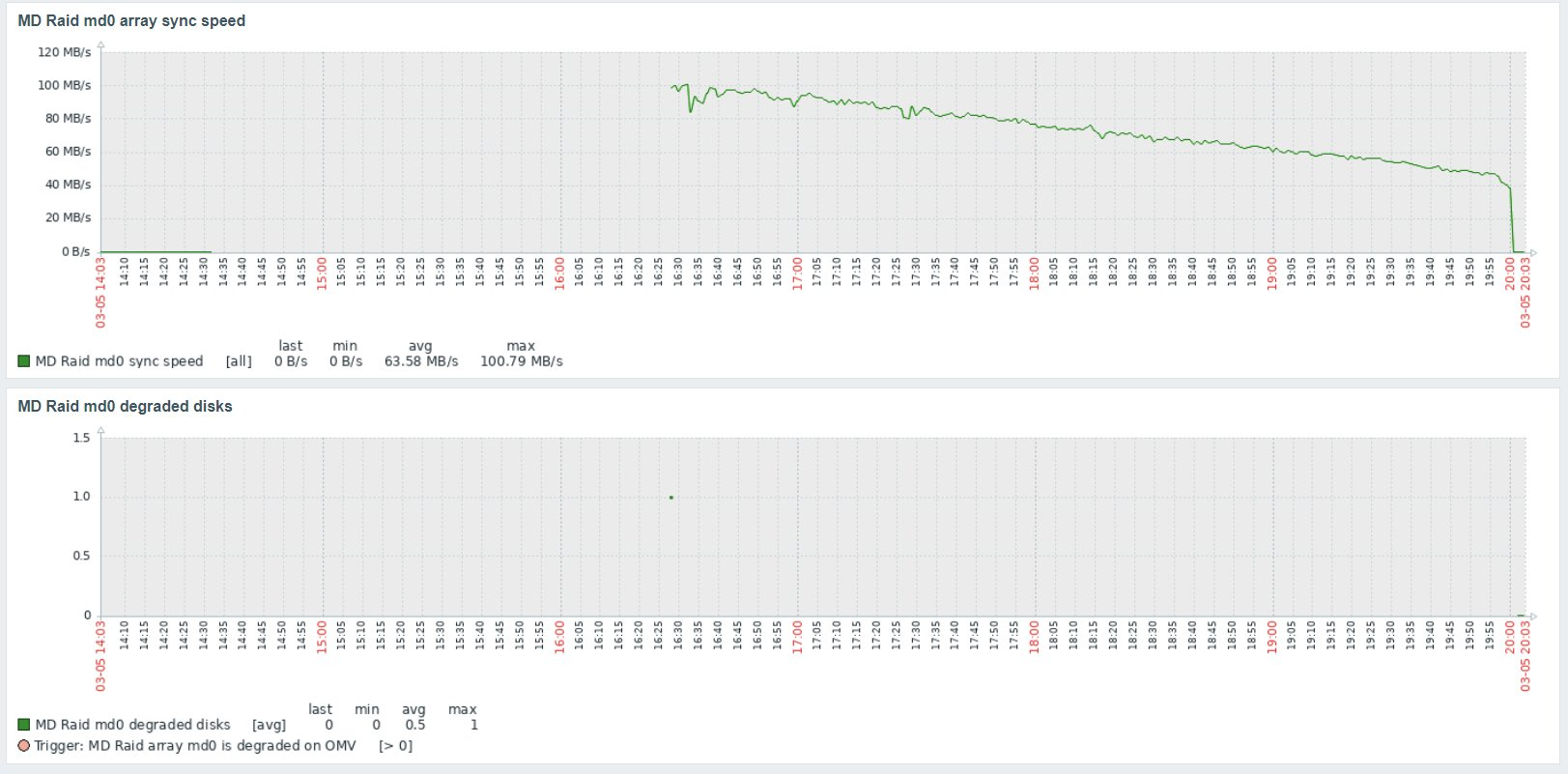

And then... DISASTER. The fourth disk, the same one that had just recently joined the RAID5 array, failed just a couple of days after I did the reconfiguration above. These are not good Sunday morning e-mails:

![Zabbix e-mail notification: Problem: [OMV] MD Raid array md0 is degraded on OMV](https://blog.damjanev.com/content/images/2023/08/image.png)

![Zabbix e-mail notification: Problem: SMART [sdd sat]: Error log contains records](https://blog.damjanev.com/content/images/2023/08/image-3.png)

The disk failed in the middle of an array consistency check.

Some 3 and a half hours of RAID rebuild time and 45€ later... we're back in business.
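A "degraded" alert like the one above boils down to the status field in `/proc/mdstat`, where each array reports one letter per member: `U` for up, `_` for missing or failed. The hypothetical helper below prints every degraded array, so it could back a Zabbix user parameter or a cron job; it is not necessarily how the Zabbix check used here works.

```sh
#!/bin/sh
# degraded_arrays [FILE]
# Prints the name of every md array whose member status, e.g. [UUU_], contains
# an underscore (a missing or failed member). Reads /proc/mdstat by default.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }       # "md0 : active raid5 ..." starts a new array
        /\[U*_[U_]*\]/ && name != "" {    # status line such as "[4/3] [UUU_]"
            print name
            name = ""
        }
    ' "${1:-/proc/mdstat}"
}
```

Called without arguments it reads the live `/proc/mdstat`; empty output means all arrays have their full set of members. `mdadm --detail /dev/md0` gives the full picture once something shows up.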

RAID is not backup

Seriously, it is not. Knowing this, I had been looking for a backup solution for my server, preferably an off-site one, from the very beginning. I ended up with a subscription to IDrive. There is a plan called "IDrive Mini" that gives you 500GB of storage for some $9.95 per year. IDrive provides backup scripts for Linux that are quite easy to set up and can be controlled either locally on the server or from the web-based dashboard.

And then... DISASTER strikes again. After a series of city-wide power failures, some data on the filesystems got corrupted. Luckily, I had an off-site backup to restore the data from. Money well spent.

What's next?

I'm quite happy with the current setup, it performs very well for the intended purpose. There are just these things that bother me. They do not bother me a lot, but still...

- That USB datastore "solution" seems clunky to me. It even had some weird episodes where the system suddenly lost the USB device and the datastore with it. It comes back after some tinkering (remove, replace, power off, power on...), but it is not a pleasant affair. So I decided to do something about it. I got myself a Dell PERC H200 HBA in IT mode (it's still in the mail, travelling from China). Its 2x SAS ports and 2x SAS-to-SATA breakout cables will provide me with 8 new SATA3 connections. This is going to replace the ASM1062 based HBA, and all the current disks will go there. I will get myself a couple of small SATA SSDs (120GB or 240GB, not bigger), connect them to the onboard SATA ports and use them as ESXi datastores. There I will host OMV with OS-level mdadm RAID, as in the beginning. The extra space on the SSD datastores can be used as ESXi-level cache, for example.

- The box is really quite thin on CPU and RAM resources. Short of replacing the motherboard (good luck finding an LGA1155 motherboard with 4 DDR3 slots), there is not much I can do about the RAM. Replacing the whole motherboard/CPU/RAM combo kind of defeats the purpose of re-using old hardware, so it is not in the game. At least not for now. It bothers me that I have two more RAM sticks (2x4GB) that are currently unusable, but it is what it is. But I can do something CPU-wise: there is a used i7-3770 in the mail (from China, as well) ;-)

- The DHCP and DNS services on my ISP-provided home router suck, and I shall do something about it! I have the homeserver, after all. This is what it's there for. I will run my own internal DNS and DHCP. But I said I don't like having the network out of service if the homeserver is down, right? Right! So how do we approach this conundrum? I plan to get myself one of those Intel based mini PCs (a refurbished HP EliteDesk 800 G2 or one of those Chinese boxes are options...) and make the DNS and DHCP servers redundant: one instance running as a VM on ESXi and another running on the mini PC. The plan is to run Proxmox on the mini PC and test it. Who knows, maybe I will end up getting two more mini PCs and doing some Proxmox HA ;-)
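A common way to make DHCP redundant without any clustering is split scope: each server leases from its own non-overlapping half of the pool, so the two can never hand out the same address, and both advertise both DNS servers. The sketch below generates such a configuration for dnsmasq, which is an assumed choice here since the software isn't decided above; the 192.168.1.0/24 addresses, the upstream resolver and the 12h lease time are placeholders too.

```sh
#!/bin/sh
# Sketch: split-scope DHCP + DNS for two dnsmasq instances, "a" (VM on ESXi)
# and "b" (mini PC). dnsmasq and every address below are assumptions.
LAN_PREFIX=192.168.1
DNS_A=$LAN_PREFIX.2   # hypothetical address of the dnsmasq VM
DNS_B=$LAN_PREFIX.3   # hypothetical address of the mini PC
UPSTREAM=1.1.1.1      # hypothetical upstream resolver

# dnsmasq_conf a|b  prints the configuration for one of the two nodes
dnsmasq_conf() {
    case $1 in
        a) range="$LAN_PREFIX.100,$LAN_PREFIX.149" ;;   # lower half of the pool
        b) range="$LAN_PREFIX.150,$LAN_PREFIX.199" ;;   # upper half of the pool
        *) echo "usage: dnsmasq_conf a|b" >&2; return 1 ;;
    esac
    cat <<EOF
domain-needed
bogus-priv
no-resolv
server=$UPSTREAM
dhcp-range=$range,12h
dhcp-option=option:router,$LAN_PREFIX.1
dhcp-option=option:dns-server,$DNS_A,$DNS_B
EOF
}
```

The output of `dnsmasq_conf a` would go to the VM and `dnsmasq_conf b` to the mini PC (for example as a file under `/etc/dnsmasq.d/`), with the ISP router's own DHCP server switched off. One caveat of this design: each dnsmasq instance only resolves hostnames for the leases it handed out itself.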

UPDATE: The MiniPC is here, as well as the HBA and the CPU ;-)

That's all for now. Happy tinkering with your homeservers!